Drones are poised to be the future of warfare

Consumer-grade sUAS are inherently vulnerable to EW

Look beyond vision-based systems to assure PNT

From DC to the Donbas, drones are now universally recognized as the future of warfare. In Ukraine today they are deadlier than artillery, accounting for up to eight in 10 Russian casualties on the front lines.

If drones are that future, smaller first-person view (FPV) drones will be the new infantry. Ukrainian FPV production alone has scaled to 200,000 a month, while British supplies to the same front lines will increase tenfold this year.

Operators are quickly discovering that these smaller devices can complete the same missions as larger UAVs for a fraction of the price. The economics of the battlefield are shifting: today, a four-figure quadcopter is well enough equipped to spot, and in some cases engage, targets that would otherwise require a multimillion-dollar aircraft or a targeted missile strike.

However, these same Class 1 and 2 drones remain highly attritable. In the first few months of the war in Ukraine, 90% of sUAS were brought down, surviving an average of between three and six flights. More than three years on, not much has changed: in June 2025, Ukraine neutralized nearly 200 Russian sUAS via EW in a single night over Kyiv.

These cost-effective drones, while revolutionary, have an Achilles’ heel: they are easy targets for EW interference, a weakness that their larger, more sophisticated UAV counterparts are increasingly well equipped to counter.

Because traditional anti-jamming and anti-spoofing devices such as nullers and beamformers are too large, heavy, and power-hungry for Class 1-2 assets, units are being forced to innovate. In Ukraine, some operators now use 20 km fiber-optic tethers to create a hardwired link between drone and operator.

The extreme nature of these workarounds shows not only how widespread jamming has become, but also how reliant troops in the field now are on these drones.

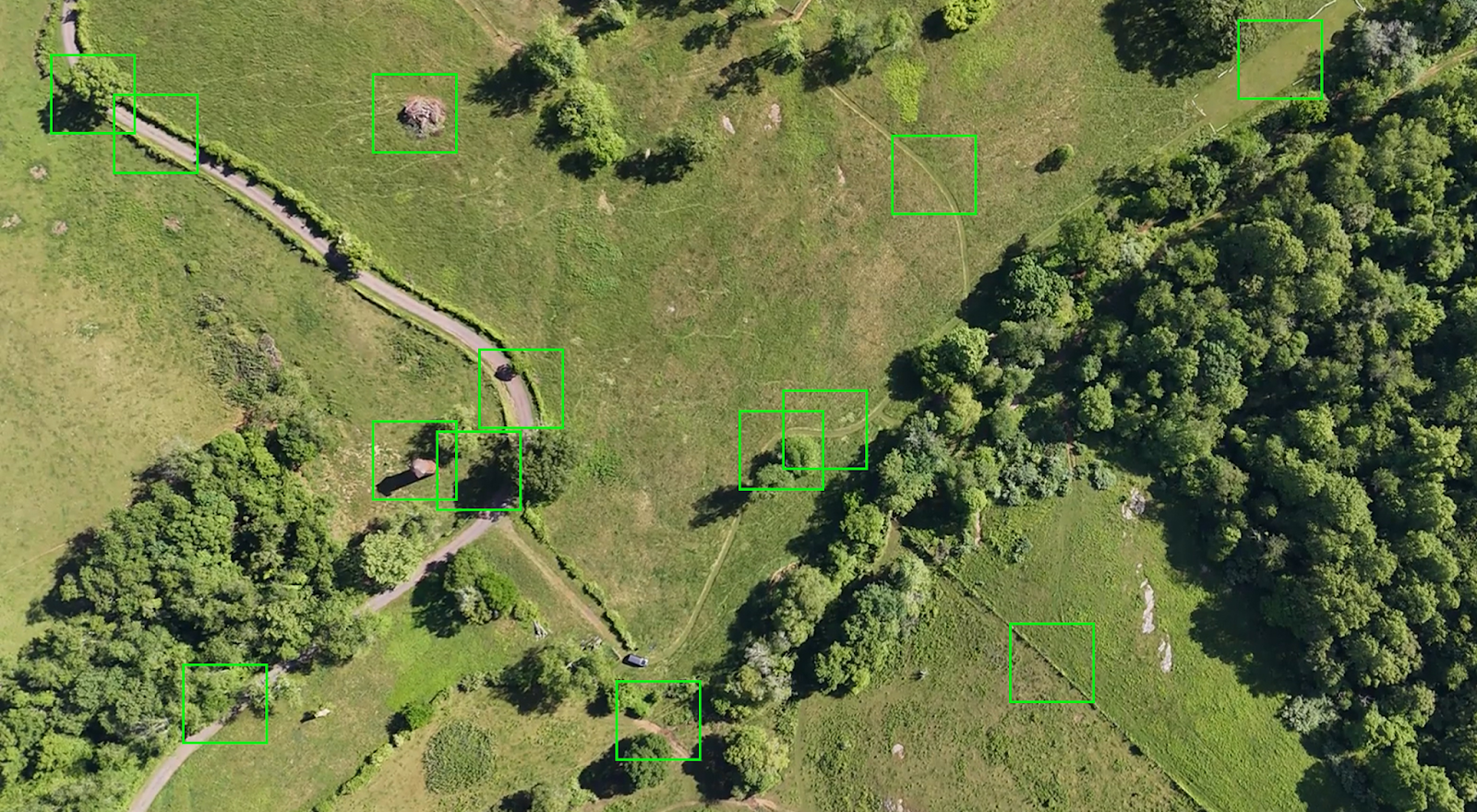

Software, and specifically computer vision, has been a significant breakthrough in protecting sUAS. An FPV drone’s onboard camera is incredibly data-rich, and major alternative navigation providers are now leveraging those inputs in vision-based navigation (VBN) approaches to this critical problem.

Optical flow, visual odometry, and simultaneous localization and mapping (SLAM) are among the most promising methods; however, VBN-reliant systems are not without their drawbacks:

By definition, vision systems rely on visual cues. From the Arctic to the South China Sea, these are few and far between across most of today’s contested environments: snowfields, deserts, and open water are all but devoid of trackable features.

In dynamic environments, the opposite problem arises. Moving objects (cars, people, even swaying trees) cause occlusion, and the camera is unable to ‘lock on’ to stable reference features.

These busy environments are often where drones are most mission-critical, but also where computer vision is put under the most strain. Vision systems also need adequate light and contrast to function, and motion blur, low light, fog, mist, and rain can all make that extremely difficult.

Even with high-quality reference matching, vision systems remain susceptible to drift. Visual odometry accumulates position error at a rate of a few percent of the distance traveled, so the error keeps growing every few hundred meters and becomes significant over the longer ranges of beyond-visual-line-of-sight (BVLOS) missions.
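To make the drift point concrete, here is a minimal Python sketch that chains per-frame visual odometry displacements, each carrying the same small scale bias (2%) and heading bias (1°). Both bias values are illustrative assumptions rather than figures for any real system, and `dead_reckon_error` is a hypothetical helper. Because nothing external corrects the estimate, the error grows in proportion to distance flown: roughly 2.7% here, or about 13 m after 500 m and over 260 m after 10 km.

```python
import math


def dead_reckon_error(distance_m, step_m=10.0, scale_bias=0.02, heading_bias_deg=1.0):
    """Final position error (m) after chaining visual-odometry steps that
    each carry the same small scale and heading bias.

    The biases are illustrative assumptions, not measured values.
    """
    heading_bias = math.radians(heading_bias_deg)
    steps = int(round(distance_m / step_m))
    true_x = 0.0
    est_x = est_y = 0.0
    for _ in range(steps):
        true_x += step_m                             # ground truth: straight along +x
        measured = step_m * (1.0 + scale_bias)       # step length slightly mis-scaled
        est_x += measured * math.cos(heading_bias)   # ...and slightly rotated
        est_y += measured * math.sin(heading_bias)
    return math.hypot(est_x - true_x, est_y)         # true y stays at 0


for d in (500, 2_000, 10_000):
    err = dead_reckon_error(d)
    print(f"{d:>6} m flown -> {err:6.1f} m error ({100 * err / d:.2f}% of distance)")
```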

For FPV drones, a single camera cannot reliably judge ground scale, because monocular vision recovers motion only up to an unknown scale factor. Without an absolute height reference (not always available, since depth sensors add weight), a change in altitude can lead to incorrectly scaled motion estimates.
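The scale problem follows from the pinhole camera model. For a downward-facing camera over flat ground, with rotation already compensated (a simplifying assumption), ground speed equals image flow divided by focal length, multiplied by height above ground. This sketch uses a hypothetical 400-pixel focal length and hypothetical helpers `ground_speed_from_flow` and `flow_seen`: if the drone climbs from 50 m to 60 m while its height estimate stays at 50 m, a true 10 m/s is read as about 8.3 m/s.

```python
def ground_speed_from_flow(flow_px_per_s, focal_length_px, height_m):
    """Metric ground speed from optical flow for a nadir-pointing pinhole
    camera over flat ground, assuming rotation is already compensated."""
    return flow_px_per_s / focal_length_px * height_m


def flow_seen(speed_m_per_s, focal_length_px, height_m):
    """Image flow (px/s) the camera would observe. It depends only on the
    ratio speed / height, which is why monocular scale is ambiguous."""
    return speed_m_per_s / height_m * focal_length_px


FOCAL_PX = 400.0    # hypothetical focal length in pixels
TRUE_SPEED = 10.0   # m/s

# Level flight at a correctly known 50 m: speed is recovered.
level_flow = flow_seen(TRUE_SPEED, FOCAL_PX, 50.0)
print(ground_speed_from_flow(level_flow, FOCAL_PX, 50.0))

# The drone climbs to 60 m, but its height estimate still says 50 m.
climb_flow = flow_seen(TRUE_SPEED, FOCAL_PX, 60.0)
est_speed = ground_speed_from_flow(climb_flow, FOCAL_PX, 50.0)
print(f"{est_speed:.2f} m/s estimated vs {TRUE_SPEED} m/s true")
```

The camera alone sees the same flow for "slow and low" as for "fast and high"; only an independent height source breaks that tie.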

The greatest limitation on the speed and range of vision-based navigation systems is the amount of computation they require.

Relying on vision introduces latency into assured PNT, and a lack of real-time data can result in oscillation or, in the worst case, complete failure. Heavy, continuous vision processing also imposes thermal constraints and risks overheating the onboard processors.
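Why latency leads to oscillation can be seen in a toy position-hold loop, sketched here under deliberately simple assumptions: a proportional controller with a hypothetical gain of 0.5 that acts on a position error measured `delay_steps` updates ago. With fresh measurements the error decays smoothly; with a measurement three updates old, the same gain overshoots, changes sign repeatedly, and the oscillation grows. Real flight controllers are far more sophisticated, but stale feedback erodes their stability margins in the same way.

```python
def simulate(gain, delay_steps, steps=200, x0=1.0):
    """Toy discrete position-hold loop: x[k+1] = x[k] - gain * x[k - delay_steps].

    The controller always acts on a measurement that is `delay_steps`
    updates old. Returns the error trajectory starting at x0.
    """
    # Assume the error sat at x0 for the whole delay window before we start.
    history = [x0] * (delay_steps + 1)
    for _ in range(steps):
        stale_measurement = history[-1 - delay_steps]
        history.append(history[-1] - gain * stale_measurement)
    return history[delay_steps:]


fresh = simulate(gain=0.5, delay_steps=0)  # real-time feedback
stale = simulate(gain=0.5, delay_steps=3)  # feedback three updates late

print("fresh, last |error|:", abs(fresh[-1]))
print("stale, max |error| over last 20 steps:", max(abs(x) for x in stale[-20:]))
```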

Despite these drawbacks, no one would question that vision data represents a huge leap forward in equipping sUAS with alternative navigation sources for GPS-degraded and GPS-denied environments. What is equally clear, however, is that the longer a drone depends on computer vision, the greater the risk to mission outcomes.

Pendulum’s approach to alternative PNT for drones reduces that risk by significantly shrinking the role of vision data in onboard processing.

Even Class 1 drones carry the inertial and environmental sensors found on almost any consumer-grade device (barometer, altimeter, accelerometer, compass, among others). By prioritizing inertial propagation and using computer vision only to correct the propagated position estimate, this opportunistic approach stands to dramatically improve sUAS performance in contested settings.
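The article does not describe how Navigate works internally, so the following is not Pendulum’s method. It is a generic, textbook sketch of inertial-first fusion under stated assumptions: a 1-D position/velocity Kalman filter that propagates a biased, noisy accelerometer every 10 ms and applies a vision position fix at most once per second, skipping fixes during a hypothetical 10-second featureless stretch (think snowfield or open water). The function `run_filter` and all noise, bias, and tuning values (`accel_bias`, `vision_sigma`, `q_c`, and so on) are illustrative. Inertial dead reckoning alone drifts quadratically with the uncorrected bias; occasional vision fixes pull the estimate back.

```python
import math
import random


def run_filter(use_vision, duration_s=30.0, dt=0.01, accel_bias=0.05,
               accel_sigma=0.1, vision_sigma=2.0, vision_period_s=1.0,
               vision_gap_s=(10.0, 20.0), q_c=0.05, seed=0):
    """1-D position/velocity Kalman filter: IMU acceleration drives the
    prediction every step; an occasional vision position fix corrects it.
    Returns the absolute position error (m) at the end of the flight."""
    imu_rng = random.Random(seed)        # identical IMU noise in both runs
    cam_rng = random.Random(seed + 1)    # vision noise drawn separately
    true_p = true_v = 0.0
    p = v = 0.0                          # filter state estimate
    P00, P01, P10, P11 = 1.0, 0.0, 0.0, 1.0   # state covariance
    R = vision_sigma ** 2
    steps = int(round(duration_s / dt))
    fix_every = int(round(vision_period_s / dt))

    for k in range(1, steps + 1):
        t = k * dt
        true_a = 0.5 * math.sin(0.2 * t)     # arbitrary true manoeuvre
        true_p += true_v * dt + 0.5 * true_a * dt * dt
        true_v += true_a * dt

        # Predict: integrate the biased, noisy accelerometer reading.
        a_meas = true_a + accel_bias + imu_rng.gauss(0.0, accel_sigma)
        p += v * dt + 0.5 * a_meas * dt * dt
        v += a_meas * dt
        # P = F P F^T + Q with F = [[1, dt], [0, 1]] and continuous
        # white-noise-acceleration Q; q_c is deliberately inflated to
        # absorb the unmodelled accelerometer bias (a tuning assumption).
        P00, P01, P10, P11 = (
            P00 + dt * (P01 + P10) + dt * dt * P11 + q_c * dt ** 3 / 3,
            P01 + dt * P11 + q_c * dt ** 2 / 2,
            P10 + dt * P11 + q_c * dt ** 2 / 2,
            P11 + q_c * dt,
        )

        # Update only when vision is enabled, due, and the scene has features.
        featureless = vision_gap_s[0] <= t < vision_gap_s[1]
        if use_vision and k % fix_every == 0 and not featureless:
            z = true_p + cam_rng.gauss(0.0, vision_sigma)
            S = P00 + R                      # innovation variance, H = [1, 0]
            K0, K1 = P00 / S, P10 / S        # Kalman gain
            innov = z - p
            p += K0 * innov
            v += K1 * innov
            P00, P01, P10, P11 = (
                (1 - K0) * P00,
                (1 - K0) * P01,
                P10 - K1 * P00,
                P11 - K1 * P01,
            )

    return abs(p - true_p)


print(f"inertial only:                   {run_filter(use_vision=False):.1f} m")
print(f"inertial + opportunistic vision: {run_filter(use_vision=True):.1f} m")
```

Keeping the inertial prediction running every step and treating vision as an occasional correction means the filter degrades gracefully when the camera has nothing to track, rather than losing its position solution outright.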

Beyond removing the need for additional hardware and sensors, lighter-weight machine-learning models massively reduce the power draw on the drone itself. In the field, that means it can fly farther, for longer.

Computer vision can and will transform the future of drone navigation; however, in contested and extreme environments, every gram of weight and watt of power counts. Multi-sensor fusion methods with inertial sensors at their core provide reliable, continuous PNT where camera data cannot always be relied on.

If you’d like to know more about Navigate for drones, reach out at navigate@pendulum.global.